Feedforward neural networks

In this example, we implement a softmax classifier network with several hidden layers. Also see the regression example for some relevant basics.

We again demonstrate the library with the MNIST database, this time using the full training set of 60,000 examples. The classifier has 10 outputs, each representing the probability that an input image belongs to one of the ten digit classes.

Loading the data

We load the data and form the training, validation, and test datasets. The datasets are shuffled and the input data are normalized.


    val MNISTtrain : Dataset = Hype.Dataset
       X: 784 x 59000
       Y: 1 x 59000
    val MNISTvalid : Dataset = Hype.Dataset
       X: 784 x 1000
       Y: 1 x 1000
    val MNISTtest : Dataset = Hype.Dataset
       X: 784 x 10000
       Y: 1 x 10000
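As a rough, library-independent illustration of this preparation step, the sketch below uses plain Python rather than the Hype API. It scales pixel values to the range 0 to 1, splits the examples into a training part and a validation part, and shuffles each part while keeping every image paired with its label. The min-max scaling, the tiny synthetic data, and the helper names `normalize` and `split_and_shuffle` are assumptions made for illustration; the text above does not say how the library normalizes its inputs.

```python
import random

def normalize(images):
    """Scale all values to [0, 1] using the global min and max (an assumed scheme)."""
    lo = min(min(img) for img in images)
    hi = max(max(img) for img in images)
    return [[(v - lo) / (hi - lo) for v in img] for img in images]

def split_and_shuffle(xs, ys, n_train, seed=0):
    """Split (image, label) pairs into training/validation parts, then shuffle each part."""
    pairs = list(zip(xs, ys))
    train, valid = pairs[:n_train], pairs[n_train:]
    rng = random.Random(seed)
    rng.shuffle(train)
    rng.shuffle(valid)
    return train, valid

# Tiny synthetic stand-in for MNIST: 6 "images" of 4 pixel bytes each.
xs = [[0, 64, 128, 255], [255, 0, 0, 0], [10, 20, 30, 40],
      [5, 5, 5, 5], [200, 100, 50, 25], [1, 2, 3, 4]]
ys = [0, 1, 2, 3, 4, 5]

train, valid = split_and_shuffle(normalize(xs), ys, n_train=5)
print(len(train), len(valid))  # 5 1
```

With the real data the same idea gives the 59,000 / 1,000 split shown in the output above.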


Defining the model

We define a neural network with 3 layers: (1) a hidden layer with 300 units, followed by ReLU activation, (2) a hidden layer with 100 units, followed by ReLU activation, (3) a final layer with 10 units, followed by softmax transformation.
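The layer sizes determine the number of learnable parameters, and the count can be checked by hand. The following plain-Python sketch (not Hype code) assumes each linear layer holds one weight per input-output pair plus one bias per output unit; the helper name `linear_params` is made up for this illustration. Under that assumption the totals agree with the figures the library reports later on this page: 235500 for the first layer and 266610 for the whole network.

```python
def linear_params(inputs, outputs):
    """Weights (outputs x inputs) plus one bias per output unit."""
    return inputs * outputs + outputs

# (inputs, outputs) of the three linear layers described above.
layers = [(28 * 28, 300), (300, 100), (100, 10)]
counts = [linear_params(i, o) for i, o in layers]

print(counts)       # [235500, 30100, 1010]
print(sum(counts))  # 266610
```

The ReLU and softmax transformations contribute nothing to the count because they have no learnable parameters.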


Freely implementing transformation layers

Now let's have a closer look at how we implemented the nonlinear transformations between the linear layers.

You might think that the instances of reLU in n.Add(reLU) above refer to a particular layer structure previously implemented as a layer module within the library. They don't. reLU is just a matrix-to-matrix elementwise function.

An important thing to note here is that the activation/transformation layers added with, for example, n.Add(reLU), can be any matrix-to-matrix function that you can express in the language. This is unlike many machine learning frameworks, where you are asked to select a particular layer type that has been implemented beforehand with its (1) forward evaluation code, (2) reverse gradient code w.r.t. layer inputs, and (3) reverse gradient code w.r.t. any layer parameters. In such a setting, a new layer design would require you to add a new layer type to the system and carefully implement these components.

Here, because the system is based on nested AD, you can freely use any matrix-to-matrix transformation as a layer, and the forward and/or reverse AD operations of your code will be handled automatically by the underlying system. For example, you can write a layer that subtracts the minimum of its input matrix and divides the result by the range (maximum minus minimum). This gives a normalization layer that scales the values to be between 0 and 1.
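To illustrate the idea in a library-independent way, here is a plain-Python sketch (not Hype code) of one matrix-to-matrix function that scales values to between 0 and 1. Min-max scaling over the whole matrix is an assumption about what such a layer computes, and the name `normalize_layer` is hypothetical.

```python
def normalize_layer(w):
    """Matrix-to-matrix map: subtract the global minimum, divide by the range."""
    lo = min(min(row) for row in w)
    hi = max(max(row) for row in w)
    return [[(v - lo) / (hi - lo) for v in row] for row in w]

m = [[-2.0, 0.0],
     [1.0, 2.0]]
out = normalize_layer(m)
print(out)  # [[0.0, 0.5], [0.75, 1.0]]
```

In an AD-based system nothing beyond the forward computation needs to be written; the derivatives of the subtraction and division are obtained automatically.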

In the above model, the softmax layer is implemented by mapping the vector-to-vector softmax function over the columns of a matrix, using DM.mapCols.

In this particular example, the output matrix has 10 rows (for the 10 target classes) and each column (a vector of size 10) is individually passed through the softmax function. The output matrix would have as many columns as the input matrix, representing the class probabilities of each input.
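The column-wise mapping can be sketched in plain Python as follows. This is not the library's implementation: `map_cols` is a hypothetical stand-in for a map over matrix columns, the matrix is a list of rows, and the example uses 3 classes and 2 inputs instead of 10 classes to keep the numbers readable.

```python
import math

def softmax(v):
    """Numerically stable softmax of a vector."""
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    s = sum(exps)
    return [e / s for e in exps]

def map_cols(f, matrix):
    """Apply a vector-to-vector function to every column of a row-major matrix."""
    cols = [f(list(col)) for col in zip(*matrix)]
    return [list(row) for row in zip(*cols)]

# 3 classes (rows) x 2 inputs (columns).
scores = [[1.0, 0.0],
          [1.0, 0.0],
          [1.0, math.log(2.0)]]
probs = map_cols(softmax, scores)

# Each column is now a probability distribution over the classes.
col_sums = [sum(col) for col in zip(*probs)]
```

The first column has equal scores, so each class gets probability 1/3. In the second column the third class has twice the unnormalized weight of the others and gets probability 1/2.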

Weight initialization schemes

When layers with learnable weights are created, the weights are initialized using one of the following schemes: InitUniform, InitNormal, InitRBM, InitReLU, InitSigmoid, InitTanh, InitStandard, or InitCustom. The appropriate initialization depends on the activation function immediately following the layer and takes the fan-in/fan-out of the layer into account. If no scheme is specified, InitStandard is used by default. These implementations are based on the existing machine learning literature, such as: Glorot, Xavier, and Yoshua Bengio. "Understanding the difficulty of training deep feedforward neural networks." International Conference on Artificial Intelligence and Statistics, 2010.
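To give a feel for how fan-in and fan-out enter such schemes, the sketch below implements two rules from the literature in plain Python: the normalized uniform initialization from the Glorot and Bengio paper cited above, and a widely used normal initialization for ReLU layers with standard deviation sqrt(2 / fan-in). Which of the library's schemes these correspond to, and the exact constants the library uses, are assumptions here; the function names are hypothetical.

```python
import math
import random

def glorot_uniform(fan_in, fan_out, rng):
    """Uniform weights in [-r, r] with r = sqrt(6 / (fan_in + fan_out))."""
    r = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-r, r) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in, fan_out, rng):
    """Zero-mean normal weights with standard deviation sqrt(2 / fan_in)."""
    sigma = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, sigma) for _ in range(fan_in)] for _ in range(fan_out)]

rng = random.Random(0)
w_tanh = glorot_uniform(100, 50, rng)  # 50 x 100 weight matrix
w_relu = he_normal(100, 50, rng)       # 50 x 100 weight matrix
```

Both rules shrink the weights as the layer gets wider, which keeps the scale of activations roughly stable from layer to layer.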

Training

Before training, let's visualize the weights of the first layer in a grid where each row of the weight matrix of the first layer is shown as a 28-by-28 image. It is an image of random weights, as expected.


Hype.Neural.Linear

784 -> 300

Learnable parameters: 235500

Init: ReLU

W's rows reshaped to (28 x 28), presented in a (17 x 18) grid:

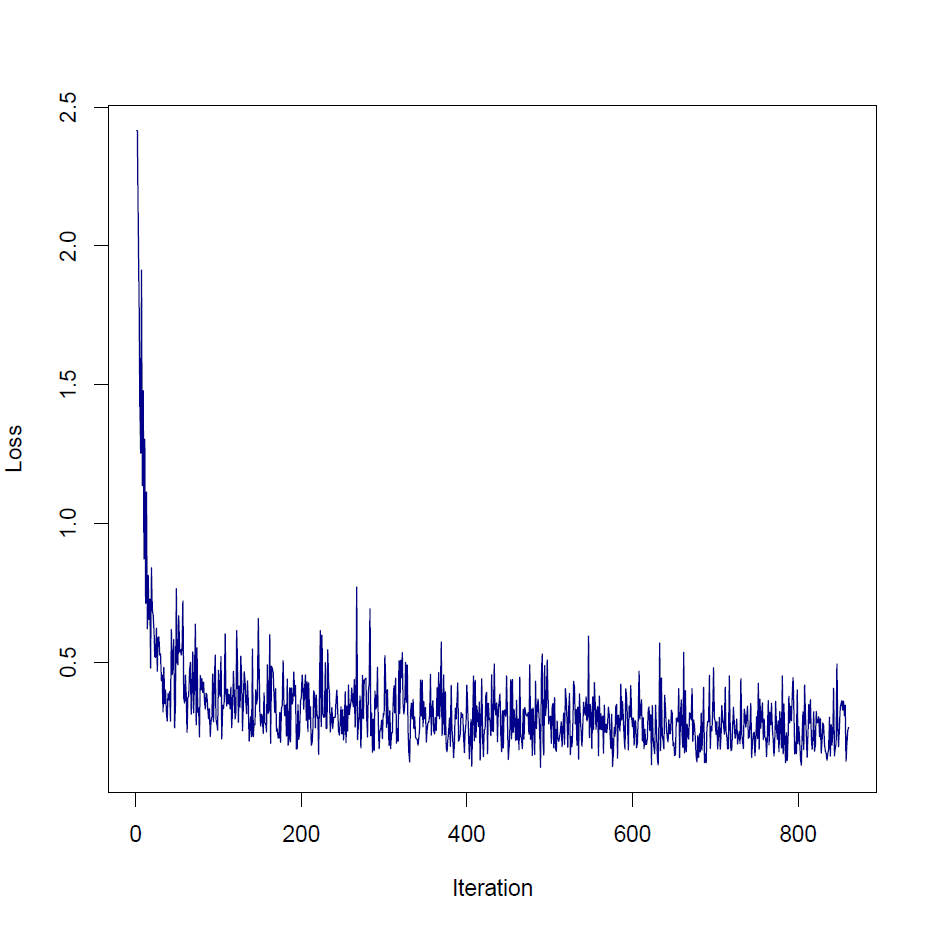

Now let's train the network with the training and validation datasets we've prepared, using RMSProp, Nesterov momentum, and cross-entropy loss.
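For readers unfamiliar with RMSProp, the following plain-Python sketch shows the basic update on a one-dimensional toy problem. It is not the library's training loop: momentum, minibatches, and the cross-entropy loss are left out, the function name `rmsprop_minimize` is hypothetical, and the toy learning rate `a0 = 0.01` is chosen only so that the example converges in a few hundred steps.

```python
import math

def rmsprop_minimize(grad, x, a0=0.01, k=0.9, steps=500, eps=1e-8):
    """Plain RMSProp: scale each step by a running RMS of recent gradients."""
    cache = 0.0
    for _ in range(steps):
        g = grad(x)
        # Exponential moving average of squared gradients with decay k.
        cache = k * cache + (1.0 - k) * g * g
        # Step size is a0 times the gradient divided by its recent RMS.
        x -= a0 * g / (math.sqrt(cache) + eps)
    return x

# Minimize f(x) = x^2, whose gradient is 2x, starting from x = 1.
x_final = rmsprop_minimize(lambda x: 2.0 * x, x=1.0)
```

Because the gradient is divided by its own recent magnitude, each step has a size of roughly `a0` regardless of how steep the loss is, and the iterate ends up within a few multiples of `a0` of the minimum at zero.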


    [12/11/2015 22:42:07] --- Training started
    [12/11/2015 22:42:07] Parameters : 266610
    [12/11/2015 22:42:07] Iterations : 1180
    [12/11/2015 22:42:07] Epochs : 2
    [12/11/2015 22:42:07] Batches : Minibatches of 100 (590 per epoch)
    [12/11/2015 22:42:07] Training data : 59000
    [12/11/2015 22:42:07] Validation data: 1000
    [12/11/2015 22:42:07] Valid. interval: 10
    [12/11/2015 22:42:07] Method : Gradient descent
    [12/11/2015 22:42:07] Learning rate : RMSProp a0 = D 0.00100000005f, k = D 0.899999976f
    [12/11/2015 22:42:07] Momentum : Nesterov D 0.899999976f
    [12/11/2015 22:42:07] Loss : Cross entropy after softmax layer
    [12/11/2015 22:42:07] Regularizer : L2 lambda = D 9.99999975e-05f
    [12/11/2015 22:42:07] Gradient clip. : None
    [12/11/2015 22:42:07] Early stopping : Stagnation thresh. = 400, overfit. thresh. = 100
    [12/11/2015 22:42:07] Improv. thresh.: D 0.995000005f
    [12/11/2015 22:42:07] Return best : true
    [12/11/2015 22:42:07] 1/2 | Batch 1/590 | D 2.383214e+000 [- ] | Valid D 2.411374e+000 [- ] | Stag: 0 Ovfit: 0
    [12/11/2015 22:42:08] 1/2 | Batch 11/590 | D 6.371681e-001 [↓▼] | Valid D 6.128169e-001 [↓▼] | Stag: 0 Ovfit: 0
    [12/11/2015 22:42:08] 1/2 | Batch 21/590 | D 4.729548e-001 [↓▼] | Valid D 4.779414e-001 [↓▼] | Stag: 0 Ovfit: 0
    [12/11/2015 22:42:09] 1/2 | Batch 31/590 | D 4.792733e-001 [↑ ] | Valid D 3.651254e-001 [↓▼] | Stag: 0 Ovfit: 0
    [12/11/2015 22:42:10] 1/2 | Batch 41/590 | D 2.977416e-001 [↓▼] | Valid D 3.680202e-001 [↑ ] | Stag: 10 Ovfit: 0
    [12/11/2015 22:42:10] 1/2 | Batch 51/590 | D 4.242567e-001 [↑ ] | Valid D 3.525212e-001 [↓▼] | Stag: 0 Ovfit: 0
    [12/11/2015 22:42:11] 1/2 | Batch 61/590 | D 2.464822e-001 [↓▼] | Valid D 3.365663e-001 [↓▼] | Stag: 0 Ovfit: 0
    [12/11/2015 22:42:11] 1/2 | Batch 71/590 | D 6.299557e-001 [↑ ] | Valid D 3.981607e-001 [↑ ] | Stag: 10 Ovfit: 0
    ...
    [12/11/2015 22:43:21] 2/2 | Batch 521/590 | D 1.163270e-001 [↓ ] | Valid D 2.264248e-001 [↓ ] | Stag: 50 Ovfit: 0
    [12/11/2015 22:43:21] 2/2 | Batch 531/590 | D 2.169427e-001 [↑ ] | Valid D 2.203927e-001 [↓ ] | Stag: 60 Ovfit: 0
    [12/11/2015 22:43:22] 2/2 | Batch 541/590 | D 2.233351e-001 [↑ ] | Valid D 2.353653e-001 [↑ ] | Stag: 70 Ovfit: 0
    [12/11/2015 22:43:22] 2/2 | Batch 551/590 | D 3.425132e-001 [↑ ] | Valid D 2.559682e-001 [↑ ] | Stag: 80 Ovfit: 0
    [12/11/2015 22:43:23] 2/2 | Batch 561/590 | D 2.768238e-001 [↓ ] | Valid D 2.412431e-001 [↓ ] | Stag: 90 Ovfit: 0
    [12/11/2015 22:43:24] 2/2 | Batch 571/590 | D 2.550858e-001 [↓ ] | Valid D 2.726600e-001 [↑ ] | Stag:100 Ovfit: 0
    [12/11/2015 22:43:24] 2/2 | Batch 581/590 | D 2.308137e-001 [↓ ] | Valid D 2.466903e-001 [↓ ] | Stag:110 Ovfit: 0
    [12/11/2015 22:43:25] Duration : 00:01:17.5011734
    [12/11/2015 22:43:25] Loss initial : D 2.383214e+000
    [12/11/2015 22:43:25] Loss final : D 1.087980e-001 (Best)
    [12/11/2015 22:43:25] Loss change : D -2.274415e+000 (-95.43 %)
    [12/11/2015 22:43:25] Loss chg. / s : D -2.934685e-002
    [12/11/2015 22:43:25] Epochs / s : 0.02580606089
    [12/11/2015 22:43:25] Epochs / min : 1.548363654
    [12/11/2015 22:43:25] --- Training finished
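The header and summary figures in this log follow from simple arithmetic, which the plain-Python sketch below reproduces. All input numbers are copied from the log itself; the variable names are chosen for this illustration only.

```python
train_examples, batch_size, epochs = 59000, 100, 2

batches_per_epoch = train_examples // batch_size
iterations = batches_per_epoch * epochs
print(batches_per_epoch, iterations)  # 590 1180

loss_initial, loss_final = 2.383214, 0.1087980
change = loss_final - loss_initial
percent = 100.0 * change / loss_initial
print(round(percent, 2))  # -95.43

duration_s = 60 + 17.5011734  # 00:01:17.5011734
epochs_per_s = epochs / duration_s
print(round(epochs_per_s, 4))  # 0.0258
```

With a validation interval of 10, the validation loss is evaluated once every 10 minibatches, which is why the batch numbers in the log advance in steps of 10.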

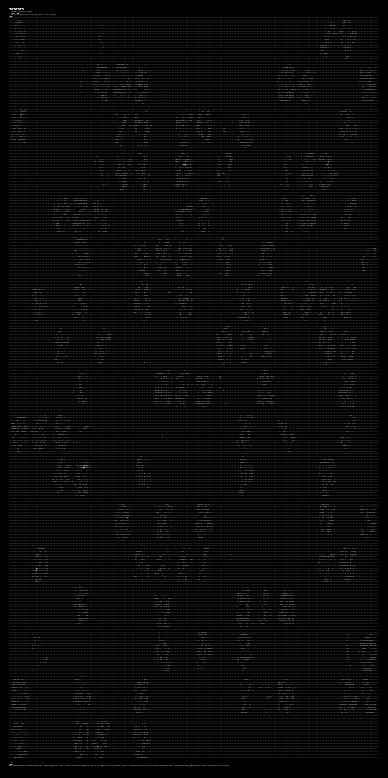

Now let's visualize the weights of the first layer in the grid. We see that the network has learned the problem domain.


Building the softmax classifier

As explained in the regression example, we just construct an instance of SoftmaxClassifier with the trained neural network as its parameter. Please see the API reference and the source code for a better understanding of how classifiers are implemented.
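Conceptually, a softmax classifier turns the network's output probabilities into class labels. The plain-Python sketch below assumes this is done by picking the most probable class for each input column; that reading, and the function name `classify`, are assumptions rather than a description of the library's source.

```python
def classify(probs):
    """For each column of a (classes x inputs) matrix, return the most probable class index."""
    return [max(range(len(col)), key=lambda k: col[k]) for col in zip(*probs)]

# 3 classes (rows) x 2 inputs (columns); each column sums to 1.
probs = [[0.1, 0.7],
         [0.2, 0.2],
         [0.7, 0.1]]
labels = classify(probs)
print(labels)  # [2, 0]
```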


Testing class predictions for 10 random elements from the MNIST test set.


    val pred : int [] = [|5; 1; 9; 2; 6; 0; 0; 5; 7; 6|]
    val real : int [] = [|5; 1; 9; 2; 6; 0; 0; 5; 7; 6|]

Let's compute the classification error for the whole MNIST test set of 10,000 examples.


val it : float32 = 0.0502999984f

The classification error is around 5%. It can be lowered further by training the model for more than the 2 epochs used here.
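The classification error is taken here to be the fraction of examples whose predicted label differs from the true one. The plain-Python sketch below (the function name is hypothetical, and this is not the library's code) applies that definition to the ten predictions shown earlier and converts the reported error rate into a count of misclassified test digits.

```python
def classification_error(pred, real):
    """Fraction of predictions that differ from the true labels."""
    return sum(p != r for p, r in zip(pred, real)) / len(real)

# The ten predictions and true labels printed above.
pred = [5, 1, 9, 2, 6, 0, 0, 5, 7, 6]
real = [5, 1, 9, 2, 6, 0, 0, 5, 7, 6]
err10 = classification_error(pred, real)
print(err10)  # 0.0

# An error of about 0.0503 on 10,000 test images.
misclassified = round(0.0503 * 10000)
print(misclassified)  # 503
```

All ten sampled digits are classified correctly, which is consistent with an overall error of about one digit in twenty.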

Classifying a single digit:


Classifying many digits at the same time:


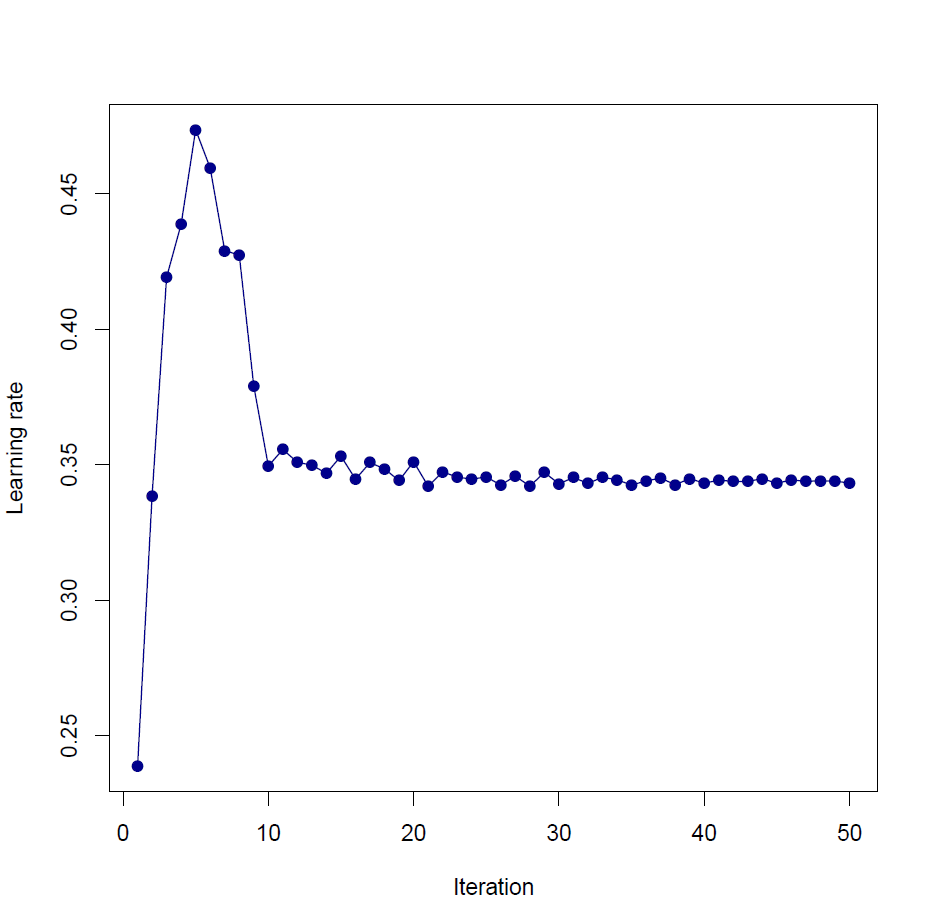

Nested optimization of training hyperparameters

As we've seen in the optimization example, nested AD allows us to apply gradient-based optimization to functions that also internally perform optimization.

This gives us the possibility of optimizing the hyperparameters of training. We can, for example, compute the gradient of the final loss of a training procedure with respect to the continuous hyperparameters of the training such as learning rates, momentum parameters, regularization coefficients, or initialization conditions.

As an example, let's train a neural network with a learning rate schedule of 50 elements, and optimize this schedule vector with another level of optimization on top of the training.
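The idea can be tried out on a toy problem without any library. The plain-Python sketch below is an assumption-laden illustration, not the library's approach: it uses a minimal forward-mode `Dual` number class written for this example, a one-parameter model with loss w², a hand-written inner gradient (a nested AD system would obtain it by AD as well), and a schedule of 5 learning rates instead of 50. It differentiates the final training loss with respect to each entry of the schedule and then improves the schedule by gradient descent.

```python
class Dual:
    """Forward-mode AD number: a value plus its derivative w.r.t. one chosen input."""
    def __init__(self, val, dot=0.0):
        self.val, self.dot = val, dot

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.dot + o.dot)
    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.dot - o.dot)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.val * o.dot + self.dot * o.val)
    __rmul__ = __mul__

def loss(w):
    return w * w

def grad(w):
    return 2.0 * w  # hand-written gradient of the toy loss

def train(schedule, w0=1.0):
    """Inner optimization: one gradient-descent step per learning rate in the schedule."""
    w = w0
    for eta in schedule:
        w = w - eta * grad(w)
    return loss(w)

def hypergradient(schedule):
    """Derivative of the final training loss w.r.t. each learning rate in the schedule."""
    result = []
    for i in range(len(schedule)):
        seeded = [Dual(e, 1.0 if j == i else 0.0) for j, e in enumerate(schedule)]
        result.append(train(seeded).dot)
    return result

schedule = [0.1] * 5
initial = train(schedule)
hg0 = hypergradient(schedule)

# Outer optimization: gradient descent on the schedule itself.
for _ in range(20):
    hg = hypergradient(schedule)
    schedule = [e - 0.05 * h for e, h in zip(schedule, hg)]
final = train(schedule)
```

For this toy problem the final loss is the product of the factors (1 - 2η)² over the schedule, so the hypergradient can be checked analytically. Forward mode needs one training run per schedule entry; reverse mode, which nested AD makes available, would obtain the whole hypergradient from a single run.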


Full name: Microsoft.FSharp.Compiler.Interactive.Settings.fsi

from DiffSharp.AD

from DiffSharp

Full name: FeedforwardNets.MNIST

type Dataset =

new : s:seq<DV * DV> -> Dataset

new : xi:seq<int> * y:DM -> Dataset

new : x:DM * yi:seq<int> -> Dataset

new : xi:seq<int> * yi:seq<int> -> Dataset

new : x:DM * y:DM -> Dataset

new : xi:seq<int> * onehotdimsx:int * y:DM -> Dataset

new : x:DM * yi:seq<int> * onehotdimsy:int -> Dataset

new : xi:seq<int> * onehotdimsx:int * yi:seq<int> * onehotdimsy:int -> Dataset

private new : x:DM * y:DM * xi:seq<int> * yi:seq<int> -> Dataset

member AppendBiasRowX : unit -> Dataset

...

Full name: Hype.Dataset

--------------------

new : s:seq<DV * DV> -> Dataset

new : x:DM * y:DM -> Dataset

new : xi:seq<int> * yi:seq<int> -> Dataset

new : x:DM * yi:seq<int> -> Dataset

new : xi:seq<int> * y:DM -> Dataset

new : x:DM * yi:seq<int> * onehotdimsy:int -> Dataset

new : xi:seq<int> * onehotdimsx:int * y:DM -> Dataset

new : xi:seq<int> * onehotdimsx:int * yi:seq<int> * onehotdimsy:int -> Dataset

static member LoadDelimited : filename:string -> DM

static member LoadDelimited : filename:string * separators:char [] -> DM

static member LoadImage : filename:string -> DM

static member LoadMNISTLabels : filename:string -> int []

static member LoadMNISTLabels : filename:string * n:int -> int []

static member LoadMNISTPixels : filename:string -> DM

static member LoadMNISTPixels : filename:string * n:int -> DM

static member VisualizeDMRowsAsImageGrid : w:DM * imagerows:int -> string

static member printLog : s:string -> unit

static member printModel : f:(DV -> DV) -> d:Dataset -> unit

Full name: Hype.Util

static member Util.LoadMNISTPixels : filename:string * n:int -> DM

static member Util.LoadMNISTLabels : filename:string * n:int -> int []

Full name: FeedforwardNets.MNISTtrain

Full name: FeedforwardNets.MNISTvalid

Full name: FeedforwardNets.MNISTtest

Full name: Microsoft.FSharp.Core.ExtraTopLevelOperators.printfn

Full name: FeedforwardNets.n

type FeedForward =

inherit Layer

new : unit -> FeedForward

member Add : f:(DM -> DM) -> unit

member Add : l:Layer -> unit

override Decode : w:DV -> unit

override Encode : unit -> DV

override Init : unit -> unit

member Insert : i:int * f:(DM -> DM) -> unit

member Insert : i:int * l:Layer -> unit

member Remove : i:int -> unit

...

Full name: Hype.Neural.FeedForward

--------------------

new : unit -> FeedForward

member FeedForward.Add : l:Layer -> unit

type Linear =

inherit Layer

new : inputs:int * outputs:int -> Linear

new : inputs:int * outputs:int * initializer:Initializer -> Linear

override Decode : w:DV -> unit

override Encode : unit -> DV

override Init : unit -> unit

override Reset : unit -> unit

override Run : x:DM -> DM

override ToString : unit -> string

override ToStringFull : unit -> string

...

Full name: Hype.Neural.Linear

--------------------

new : inputs:int * outputs:int -> Linear

new : inputs:int * outputs:int * initializer:Initializer -> Linear

| InitUniform of D * D

| InitNormal of D * D

| InitRBM of D

| InitReLU

| InitSigmoid

| InitTanh

| InitStandard

| InitCustom of (int -> int -> D)

member InitDM : m:DM -> DM

member InitDM : m:int * n:int -> DM

override ToString : unit -> string

Full name: Hype.Neural.Initializer

Full name: DiffSharp.Util.reLU

union case DM.DM: float32 [,] -> DM

--------------------

module DM

from DiffSharp.AD.Float32

--------------------

type DM =

| DM of float32 [,]

| DMF of DM * DM * uint32

| DMR of DM * DM ref * TraceOp * uint32 ref * uint32

member Copy : unit -> DM

member GetCols : unit -> seq<DV>

member GetForward : t:DM * i:uint32 -> DM

member GetReverse : i:uint32 -> DM

member GetRows : unit -> seq<DV>

member GetSlice : rowStart:int option * rowFinish:int option * col:int -> DV

member GetSlice : row:int * colStart:int option * colFinish:int option -> DV

member GetSlice : rowStart:int option * rowFinish:int option * colStart:int option * colFinish:int option -> DM

member ToMathematicaString : unit -> string

member ToMatlabString : unit -> string

override ToString : unit -> string

member Visualize : unit -> string

member A : DM

member Cols : int

member F : uint32

member Item : i:int * j:int -> D with get

member Length : int

member P : DM

member PD : DM

member Rows : int

member T : DM

member A : DM with set

member F : uint32 with set

static member Abs : a:DM -> DM

static member Acos : a:DM -> DM

static member AddDiagonal : a:DM * b:DV -> DM

static member AddItem : a:DM * i:int * j:int * b:D -> DM

static member AddSubMatrix : a:DM * i:int * j:int * b:DM -> DM

static member Asin : a:DM -> DM

static member Atan : a:DM -> DM

static member Atan2 : a:int * b:DM -> DM

static member Atan2 : a:DM * b:int -> DM

static member Atan2 : a:float32 * b:DM -> DM

static member Atan2 : a:DM * b:float32 -> DM

static member Atan2 : a:D * b:DM -> DM

static member Atan2 : a:DM * b:D -> DM

static member Atan2 : a:DM * b:DM -> DM

static member Ceiling : a:DM -> DM

static member Cos : a:DM -> DM

static member Cosh : a:DM -> DM

static member Det : a:DM -> D

static member Diagonal : a:DM -> DV

static member Exp : a:DM -> DM

static member Floor : a:DM -> DM

static member Inverse : a:DM -> DM

static member Log : a:DM -> DM

static member Log10 : a:DM -> DM

static member Max : a:DM -> D

static member Max : a:D * b:DM -> DM

static member Max : a:DM * b:D -> DM

static member Max : a:DM * b:DM -> DM

static member MaxIndex : a:DM -> int * int

static member Mean : a:DM -> D

static member Min : a:DM -> D

static member Min : a:D * b:DM -> DM

static member Min : a:DM * b:D -> DM

static member Min : a:DM * b:DM -> DM

static member MinIndex : a:DM -> int * int

static member Normalize : a:DM -> DM

static member OfArray : m:int * a:D [] -> DM

static member OfArray2D : a:D [,] -> DM

static member OfCols : n:int * a:DV -> DM

static member OfRows : s:seq<DV> -> DM

static member OfRows : m:int * a:DV -> DM

static member Op_DM_D : a:DM * ff:(float32 [,] -> float32) * fd:(DM -> D) * df:(D * DM * DM -> D) * r:(DM -> TraceOp) -> D

static member Op_DM_DM : a:DM * ff:(float32 [,] -> float32 [,]) * fd:(DM -> DM) * df:(DM * DM * DM -> DM) * r:(DM -> TraceOp) -> DM

static member Op_DM_DM_DM : a:DM * b:DM * ff:(float32 [,] * float32 [,] -> float32 [,]) * fd:(DM * DM -> DM) * df_da:(DM * DM * DM -> DM) * df_db:(DM * DM * DM -> DM) * df_dab:(DM * DM * DM * DM * DM -> DM) * r_d_d:(DM * DM -> TraceOp) * r_d_c:(DM * DM -> TraceOp) * r_c_d:(DM * DM -> TraceOp) -> DM

static member Op_DM_DV : a:DM * ff:(float32 [,] -> float32 []) * fd:(DM -> DV) * df:(DV * DM * DM -> DV) * r:(DM -> TraceOp) -> DV

static member Op_DM_DV_DM : a:DM * b:DV * ff:(float32 [,] * float32 [] -> float32 [,]) * fd:(DM * DV -> DM) * df_da:(DM * DM * DM -> DM) * df_db:(DM * DV * DV -> DM) * df_dab:(DM * DM * DM * DV * DV -> DM) * r_d_d:(DM * DV -> TraceOp) * r_d_c:(DM * DV -> TraceOp) * r_c_d:(DM * DV -> TraceOp) -> DM

static member Op_DM_DV_DV : a:DM * b:DV * ff:(float32 [,] * float32 [] -> float32 []) * fd:(DM * DV -> DV) * df_da:(DV * DM * DM -> DV) * df_db:(DV * DV * DV -> DV) * df_dab:(DV * DM * DM * DV * DV -> DV) * r_d_d:(DM * DV -> TraceOp) * r_d_c:(DM * DV -> TraceOp) * r_c_d:(DM * DV -> TraceOp) -> DV

static member Op_DM_D_DM : a:DM * b:D * ff:(float32 [,] * float32 -> float32 [,]) * fd:(DM * D -> DM) * df_da:(DM * DM * DM -> DM) * df_db:(DM * D * D -> DM) * df_dab:(DM * DM * DM * D * D -> DM) * r_d_d:(DM * D -> TraceOp) * r_d_c:(DM * D -> TraceOp) * r_c_d:(DM * D -> TraceOp) -> DM

static member Op_DV_DM_DM : a:DV * b:DM * ff:(float32 [] * float32 [,] -> float32 [,]) * fd:(DV * DM -> DM) * df_da:(DM * DV * DV -> DM) * df_db:(DM * DM * DM -> DM) * df_dab:(DM * DV * DV * DM * DM -> DM) * r_d_d:(DV * DM -> TraceOp) * r_d_c:(DV * DM -> TraceOp) * r_c_d:(DV * DM -> TraceOp) -> DM

static member Op_DV_DM_DV : a:DV * b:DM * ff:(float32 [] * float32 [,] -> float32 []) * fd:(DV * DM -> DV) * df_da:(DV * DV * DV -> DV) * df_db:(DV * DM * DM -> DV) * df_dab:(DV * DV * DV * DM * DM -> DV) * r_d_d:(DV * DM -> TraceOp) * r_d_c:(DV * DM -> TraceOp) * r_c_d:(DV * DM -> TraceOp) -> DV

static member Op_D_DM_DM : a:D * b:DM * ff:(float32 * float32 [,] -> float32 [,]) * fd:(D * DM -> DM) * df_da:(DM * D * D -> DM) * df_db:(DM * DM * DM -> DM) * df_dab:(DM * D * D * DM * DM -> DM) * r_d_d:(D * DM -> TraceOp) * r_d_c:(D * DM -> TraceOp) * r_c_d:(D * DM -> TraceOp) -> DM

static member Pow : a:int * b:DM -> DM

static member Pow : a:DM * b:int -> DM

static member Pow : a:float32 * b:DM -> DM

static member Pow : a:DM * b:float32 -> DM

static member Pow : a:D * b:DM -> DM

static member Pow : a:DM * b:D -> DM

static member Pow : a:DM * b:DM -> DM

static member ReLU : a:DM -> DM

static member ReshapeToDV : a:DM -> DV

static member Round : a:DM -> DM

static member Sigmoid : a:DM -> DM

static member Sign : a:DM -> DM

static member Sin : a:DM -> DM

static member Sinh : a:DM -> DM

static member SoftPlus : a:DM -> DM

static member SoftSign : a:DM -> DM

static member Solve : a:DM * b:DV -> DV

static member SolveSymmetric : a:DM * b:DV -> DV

static member Sqrt : a:DM -> DM

static member StandardDev : a:DM -> D

static member Standardize : a:DM -> DM

static member Sum : a:DM -> D

static member Tan : a:DM -> DM

static member Tanh : a:DM -> DM

static member Trace : a:DM -> D

static member Transpose : a:DM -> DM

static member Variance : a:DM -> D

static member ZeroMN : m:int -> n:int -> DM

static member Zero : DM

static member ( + ) : a:int * b:DM -> DM

static member ( + ) : a:DM * b:int -> DM

static member ( + ) : a:float32 * b:DM -> DM

static member ( + ) : a:DM * b:float32 -> DM

static member ( + ) : a:DM * b:DV -> DM

static member ( + ) : a:DV * b:DM -> DM

static member ( + ) : a:D * b:DM -> DM

static member ( + ) : a:DM * b:D -> DM

static member ( + ) : a:DM * b:DM -> DM

static member ( / ) : a:int * b:DM -> DM

static member ( / ) : a:DM * b:int -> DM

static member ( / ) : a:float32 * b:DM -> DM

static member ( / ) : a:DM * b:float32 -> DM

static member ( / ) : a:D * b:DM -> DM

static member ( / ) : a:DM * b:D -> DM

static member ( ./ ) : a:DM * b:DM -> DM

static member ( .* ) : a:DM * b:DM -> DM

static member op_Explicit : d:float32 [,] -> DM

static member op_Explicit : d:DM -> float32 [,]

static member ( * ) : a:int * b:DM -> DM

static member ( * ) : a:DM * b:int -> DM

static member ( * ) : a:float32 * b:DM -> DM

static member ( * ) : a:DM * b:float32 -> DM

static member ( * ) : a:D * b:DM -> DM

static member ( * ) : a:DM * b:D -> DM

static member ( * ) : a:DV * b:DM -> DV

static member ( * ) : a:DM * b:DV -> DV

static member ( * ) : a:DM * b:DM -> DM

static member ( - ) : a:int * b:DM -> DM

static member ( - ) : a:DM * b:int -> DM

static member ( - ) : a:float32 * b:DM -> DM

static member ( - ) : a:DM * b:float32 -> DM

static member ( - ) : a:D * b:DM -> DM

static member ( - ) : a:DM * b:D -> DM

static member ( - ) : a:DM * b:DM -> DM

static member ( ~- ) : a:DM -> DM

Full name: DiffSharp.AD.Float32.DM

Full name: DiffSharp.AD.Float32.DM.mapCols

Full name: DiffSharp.Util.softmax

static member DM.Min : a:D * b:DM -> DM

static member DM.Min : a:DM * b:D -> DM

static member DM.Min : a:DM * b:DM -> DM

static member DM.Max : a:D * b:DM -> DM

static member DM.Max : a:DM * b:D -> DM

static member DM.Max : a:DM * b:DM -> DM

| InitUniform of D * D

| InitNormal of D * D

| InitRBM of D

| InitReLU

| InitSigmoid

| InitTanh

| InitStandard

| InitCustom of (int -> int -> D)

member InitDM : m:DM -> DM

member InitDM : m:int * n:int -> DM

override ToString : unit -> string

Full name: FeedforwardNets.Initializer

| D of float32

| DF of D * D * uint32

| DR of D * D ref * TraceOp * uint32 ref * uint32

interface IComparable

member Copy : unit -> D

override Equals : other:obj -> bool

member GetForward : t:D * i:uint32 -> D

override GetHashCode : unit -> int

member GetReverse : i:uint32 -> D

override ToString : unit -> string

member A : D

member F : uint32

member P : D

member PD : D

member T : D

member A : D with set

member F : uint32 with set

static member Abs : a:D -> D

static member Acos : a:D -> D

static member Asin : a:D -> D

static member Atan : a:D -> D

static member Atan2 : a:int * b:D -> D

static member Atan2 : a:D * b:int -> D

static member Atan2 : a:float32 * b:D -> D

static member Atan2 : a:D * b:float32 -> D

static member Atan2 : a:D * b:D -> D

static member Ceiling : a:D -> D

static member Cos : a:D -> D

static member Cosh : a:D -> D

static member Exp : a:D -> D

static member Floor : a:D -> D

static member Log : a:D -> D

static member Log10 : a:D -> D

static member LogSumExp : a:D -> D

static member Max : a:D * b:D -> D

static member Min : a:D * b:D -> D

static member Op_D_D : a:D * ff:(float32 -> float32) * fd:(D -> D) * df:(D * D * D -> D) * r:(D -> TraceOp) -> D

static member Op_D_D_D : a:D * b:D * ff:(float32 * float32 -> float32) * fd:(D * D -> D) * df_da:(D * D * D -> D) * df_db:(D * D * D -> D) * df_dab:(D * D * D * D * D -> D) * r_d_d:(D * D -> TraceOp) * r_d_c:(D * D -> TraceOp) * r_c_d:(D * D -> TraceOp) -> D

static member Pow : a:int * b:D -> D

static member Pow : a:D * b:int -> D

static member Pow : a:float32 * b:D -> D

static member Pow : a:D * b:float32 -> D

static member Pow : a:D * b:D -> D

static member ReLU : a:D -> D

static member Round : a:D -> D

static member Sigmoid : a:D -> D

static member Sign : a:D -> D

static member Sin : a:D -> D

static member Sinh : a:D -> D

static member SoftPlus : a:D -> D

static member SoftSign : a:D -> D

static member Sqrt : a:D -> D

static member Tan : a:D -> D

static member Tanh : a:D -> D

static member One : D

static member Zero : D

static member ( + ) : a:int * b:D -> D

static member ( + ) : a:D * b:int -> D

static member ( + ) : a:float32 * b:D -> D

static member ( + ) : a:D * b:float32 -> D

static member ( + ) : a:D * b:D -> D

static member ( / ) : a:int * b:D -> D

static member ( / ) : a:D * b:int -> D

static member ( / ) : a:float32 * b:D -> D

static member ( / ) : a:D * b:float32 -> D

static member ( / ) : a:D * b:D -> D

static member op_Explicit : d:D -> float32

static member ( * ) : a:int * b:D -> D

static member ( * ) : a:D * b:int -> D

static member ( * ) : a:float32 * b:D -> D

static member ( * ) : a:D * b:float32 -> D

static member ( * ) : a:D * b:D -> D

static member ( - ) : a:int * b:D -> D

static member ( - ) : a:D * b:int -> D

static member ( - ) : a:float32 * b:D -> D

static member ( - ) : a:D * b:float32 -> D

static member ( - ) : a:D * b:D -> D

static member ( ~- ) : a:D -> D

Full name: DiffSharp.AD.Float32.D

val int : value:'T -> int (requires member op_Explicit)

Full name: Microsoft.FSharp.Core.Operators.int

--------------------

type int = int32

Full name: Microsoft.FSharp.Core.int

--------------------

type int<'Measure> = int

Full name: Microsoft.FSharp.Core.int<_>

Full name: FeedforwardNets.Initializer.ToString

Full name: Microsoft.FSharp.Core.ExtraTopLevelOperators.sprintf

Full name: FeedforwardNets.Initializer.InitDM

type Rnd =

new : unit -> Rnd

static member Choice : a:'a0 [] -> 'a0

static member Choice : a:'a [] * probs:DV -> 'a

static member Choice : a:'a0 [] * probs:float32 [] -> 'a0

static member Normal : unit -> float32

static member Normal : mu:float32 * sigma:float32 -> float32

static member NormalD : unit -> D

static member NormalD : mu:D * sigma:D -> D

static member NormalDM : m:int * n:int -> DM

static member NormalDM : m:int * n:int * mu:D * sigma:D -> DM

...

Full name: Hype.Rnd

--------------------

new : unit -> Rnd

static member Rnd.UniformDM : m:int * n:int * max:D -> DM

static member Rnd.UniformDM : m:int * n:int * min:D * max:D -> DM

static member Rnd.NormalDM : m:int * n:int * mu:D * sigma:D -> DM

Full name: Microsoft.FSharp.Core.Operators.sqrt

val float32 : value:'T -> float32 (requires member op_Explicit)

Full name: Microsoft.FSharp.Core.Operators.float32

--------------------

type float32 = System.Single

Full name: Microsoft.FSharp.Core.float32

--------------------

type float32<'Measure> = float32

Full name: Microsoft.FSharp.Core.float32<_>

Full name: DiffSharp.AD.Float32.DM.init

Full name: FeedforwardNets.Initializer.InitDM

member Initializer.InitDM : m:int * n:int -> DM

Full name: FeedforwardNets.l

Full name: FeedforwardNets.p

module Params

from Hype

--------------------

type Params =

{Epochs: int;

Method: Method;

LearningRate: LearningRate;

Momentum: Momentum;

Loss: Loss;

Regularization: Regularization;

GradientClipping: GradientClipping;

Batch: Batch;

EarlyStopping: EarlyStopping;

ImprovementThreshold: D;

...}

Full name: Hype.Params

Full name: Hype.Params.Default

| Early of int * int

| NoEarly

override ToString : unit -> string

static member DefaultEarly : EarlyStopping

Full name: Hype.EarlyStopping

| Full

| Minibatch of int

| Stochastic

override ToString : unit -> string

member Func : (Dataset -> int -> Dataset)

Full name: Hype.Batch

| L1Loss

| L2Loss

| Quadratic

| CrossEntropyOnLinear

| CrossEntropyOnSoftmax

override ToString : unit -> string

member Func : (Dataset -> (DM -> DM) -> D)

Full name: Hype.Loss

union case Momentum.Momentum: D -> Momentum

--------------------

type Momentum =

| Momentum of D

| Nesterov of D

| NoMomentum

override ToString : unit -> string

member Func : (DV -> DV -> DV)

static member DefaultMomentum : Momentum

static member DefaultNesterov : Momentum

Full name: Hype.Momentum

| Constant of D

| Decay of D * D

| ExpDecay of D * D

| Schedule of DV

| Backtrack of D * D * D

| StrongWolfe of D * D * D

| AdaGrad of D

| RMSProp of D * D

override ToString : unit -> string

member Func : (int -> DV -> (DV -> D) -> D -> DV -> DV ref -> DV -> obj)

static member DefaultAdaGrad : LearningRate

static member DefaultBacktrack : LearningRate

static member DefaultConstant : LearningRate

static member DefaultDecay : LearningRate

static member DefaultExpDecay : LearningRate

static member DefaultRMSProp : LearningRate

static member DefaultStrongWolfe : LearningRate

Full name: Hype.LearningRate

Full name: FeedforwardNets.lhist

member Layer.Train : d:Dataset * par:Params -> D * D []

member Layer.Train : d:Dataset * v:Dataset -> D * D []

member Layer.Train : d:Dataset * v:Dataset * par:Params -> D * D []

Full name: FeedforwardNets.ll

module Array

from DiffSharp.Util

--------------------

module Array

from Microsoft.FSharp.Collections

Full name: Microsoft.FSharp.Collections.Array.map

val float : value:'T -> float (requires member op_Explicit)

Full name: Microsoft.FSharp.Core.Operators.float

--------------------

type float = System.Double

Full name: Microsoft.FSharp.Core.float

--------------------

type float<'Measure> = float

Full name: Microsoft.FSharp.Core.float<_>

Full name: RProvider.Helpers.namedParams

Full name: Microsoft.FSharp.Core.Operators.box

static member Axis : ?x: obj * ?at: obj * ?___: obj * ?side: obj * ?labels: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

static member abline : ?a: obj * ?b: obj * ?h: obj * ?v: obj * ?reg: obj * ?coef: obj * ?untf: obj * ?___: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

static member arrows : ?x0: obj * ?y0: obj * ?x1: obj * ?y1: obj * ?length: obj * ?angle: obj * ?code: obj * ?col: obj * ?lty: obj * ?lwd: obj * ?___: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

static member assocplot : ?x: obj * ?col: obj * ?space: obj * ?main: obj * ?xlab: obj * ?ylab: obj -> SymbolicExpression + 1 overload

static member axTicks : ?side: obj * ?axp: obj * ?usr: obj * ?log: obj * ?nintLog: obj -> SymbolicExpression + 1 overload

static member axis : ?side: obj * ?at: obj * ?labels: obj * ?tick: obj * ?line: obj * ?pos: obj * ?outer: obj * ?font: obj * ?lty: obj * ?lwd: obj * ?lwd_ticks: obj * ?col: obj * ?col_ticks: obj * ?hadj: obj * ?padj: obj * ?___: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

static member axis_Date : ?side: obj * ?x: obj * ?at: obj * ?format: obj * ?labels: obj * ?___: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

static member axis_POSIXct : ?side: obj * ?x: obj * ?at: obj * ?format: obj * ?labels: obj * ?___: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

static member barplot : ?height: obj * ?___: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

static member barplot_default : ?height: obj * ?width: obj * ?space: obj * ?names_arg: obj * ?legend_text: obj * ?beside: obj * ?horiz: obj * ?density: obj * ?angle: obj * ?col: obj * ?border: obj * ?main: obj * ?sub: obj * ?xlab: obj * ?ylab: obj * ?xlim: obj * ?ylim: obj * ?xpd: obj * ?log: obj * ?axes: obj * ?axisnames: obj * ?cex_axis: obj * ?cex_names: obj * ?inside: obj * ?plot: obj * ?axis_lty: obj * ?offset: obj * ?add: obj * ?args_legend: obj * ?___: obj * ?paramArray: obj [] -> SymbolicExpression + 1 overload

...

Full name: RProvider.graphics.R

R functions for base graphics

R.plot(?x: obj, ?y: obj, ?___: obj, ?paramArray: obj []) : RDotNet.SymbolicExpression

Generic X-Y Plotting

Full name: Microsoft.FSharp.Core.Operators.ignore

Full name: FeedforwardNets.cc

type SoftmaxClassifier =

inherit Classifier

new : l:Layer -> SoftmaxClassifier

new : f:(DM -> DM) -> SoftmaxClassifier

override Classify : x:DV -> int

override Classify : x:DM -> int []

Full name: Hype.SoftmaxClassifier

--------------------

new : f:(DM -> DM) -> SoftmaxClassifier

new : l:Layer -> SoftmaxClassifier

Full name: FeedforwardNets.pred

override SoftmaxClassifier.Classify : x:DM -> int []

Full name: FeedforwardNets.real

member Classifier.ClassificationError : x:DM * y:int [] -> float32

Full name: FeedforwardNets.cls
